UX Roundup: AI Workflows | One-Click Songs | Auto-Slideshow | Rewording Questions | Multiple AIs

- Jakob Nielsen

- Aug 18, 2025

- 13 min read

Summary: Rethinking workflows for AI | Lowering the barrier to creating content with AI | NotebookLM automatically creates a slideshow from an article | Changing the wording of questions in standard surveys | The market will support multiple AI models

UX Roundup for August 18, 2025. (GPT Image-1)

Rethinking Workflows For AI

We must redesign our old workflows for AI, rather than simply staying within existing boxes and suboptimizing those tasks. (GPT Image-1)

Most organizations approach AI adoption with painful linearity. They identify Task A, sprinkle some AI magic dust on it, achieve a modest 20% improvement, then move to Task B. Rinse and repeat. This task-by-task optimization is comfortable, measurable, and fundamentally wrong-headed. It’s like teaching a robot to use a typewriter more efficiently instead of reimagining document creation entirely.

Ask the big question: why are we doing this task in the first place? To realize AI’s humongous potential, use it to address the big question rather than the legacy tasks that were designed around human limitations. (GPT Image-1)

A “cowpath” is any business process that evolved organically based on the constraints of manual human cognition. These legacy workflows are typically sequential, fragmented across departments, and laden with checkpoints designed to mitigate human error. They represent the path of least resistance for information flow in a pre-AI world.

The cows move faithfully along the same cowpath they’ve always used. Even though it’s traditional, it’s not the most efficient way to move from point A to point B when we introduce new technology. (GPT Image-1)

Paving this cowpath means using AI to automate or accelerate individual steps within that flawed, human-centric process. The business world is saturated with examples of this thinking, filling thousands of vendor case studies and corporate press releases.

Meeting Summarization: A team uses an AI tool to automatically generate minutes from a video call. This is paving the cowpath. The fundamental process — a synchronous meeting with multiple participants followed by a static summary document — remains unchanged. A reinvented workflow might involve an AI agent that synthesizes relevant information from multiple sources before a meeting, distributes it as a pre-read, and captures only the key decisions and action items, making the meeting itself shorter or even unnecessary.

Invoice Processing: A finance department implements an AI system to automatically scan invoices and enter data into the accounting system, reducing manual labor. This is a classic paved cowpath. The underlying process of generating, sending, receiving, and approving individual invoices persists. A reinvented workflow would redesign the entire procurement-to-payment value chain, perhaps using smart contracts or an integrated platform where transactions are recorded and reconciled automatically, eliminating the concept of a standalone "invoice" altogether.

Customer Support Chatbots: A company deploys a chatbot to answer frequently asked questions more quickly than a human agent. While this can improve response times, it is still paving the cowpath of reactive problem-solving. A truly transformed, AI-native system would analyze user behavior, product telemetry, and support tickets to proactively identify points of confusion and preempt customer questions before they are even asked.

These examples all share a common feature: they use new technology to do an old thing slightly better, rather than enabling a new and superior way of achieving the core business goal.

The persistence of the paved cowpath approach is not accidental. It is seductive to organizations for 4 clear, rational reasons.

It imposes a low cognitive load on leadership. It does not require executives to fundamentally rethink their business model, organizational structure, or core processes. AI is treated as a bolt-on enhancement that can be managed within existing departmental budgets and hierarchies. A marketing team buys a marketing AI, and a finance team buys a finance AI, with no need for complex, cross-functional strategic alignment.

It provides easy metrics. Success can be measured with simple, familiar efficiency KPIs: “time saved per task,” “cost reduced per transaction,” or “customer support tickets closed per hour.” These metrics are comforting because they show a positive, linear improvement. A company like Cognizant can report saving 90 minutes per task on preparing quarterly business reviews and declare victory. The time saving is a genuine improvement, but the metric measures the efficiency of a legacy process, not its ultimate effectiveness or its contribution to competitive advantage. It mistakes activity for progress.

The approach is heavily promoted by the vendor ecosystem. Many technology vendors design and market these task-level solutions because they are easier to sell and implement than a comprehensive business transformation. It is far simpler to sell a tool that automates one step in a legal department's workflow than it is to sell a platform that requires the entire legal function to be redesigned.

The siloed structure of the modern enterprise creates a systemic trap that encourages this flawed thinking. Corporations are organized into functional departments like Finance, HR, and Marketing. Authority and budgets are allocated within these silos, and managers are incentivized to optimize the performance of their specific function. Consequently, they seek out and procure AI tools that promise to improve tasks within their remit: one AI for automating financial reports, another AI for screening resumes, and a third AI for generating ad copy. This local optimization reinforces the organizational silos and prevents the cross-functional, first-principles thinking required for true, end-to-end workflow reinvention.

These four factors create a powerful gravitational pull toward incrementalism, leading to a strategic dead end.

Four organizational reasons most companies focus their AI efforts on improving low-level tasks within existing workflows instead of aiming for transformational change. (GPT Image-1)

The primary barrier to AI transformation is therefore not technological; it is organizational and political. The structure that makes a company efficient at its old way of doing business is the very thing that prevents it from adopting a new, superior AI-first workflow.

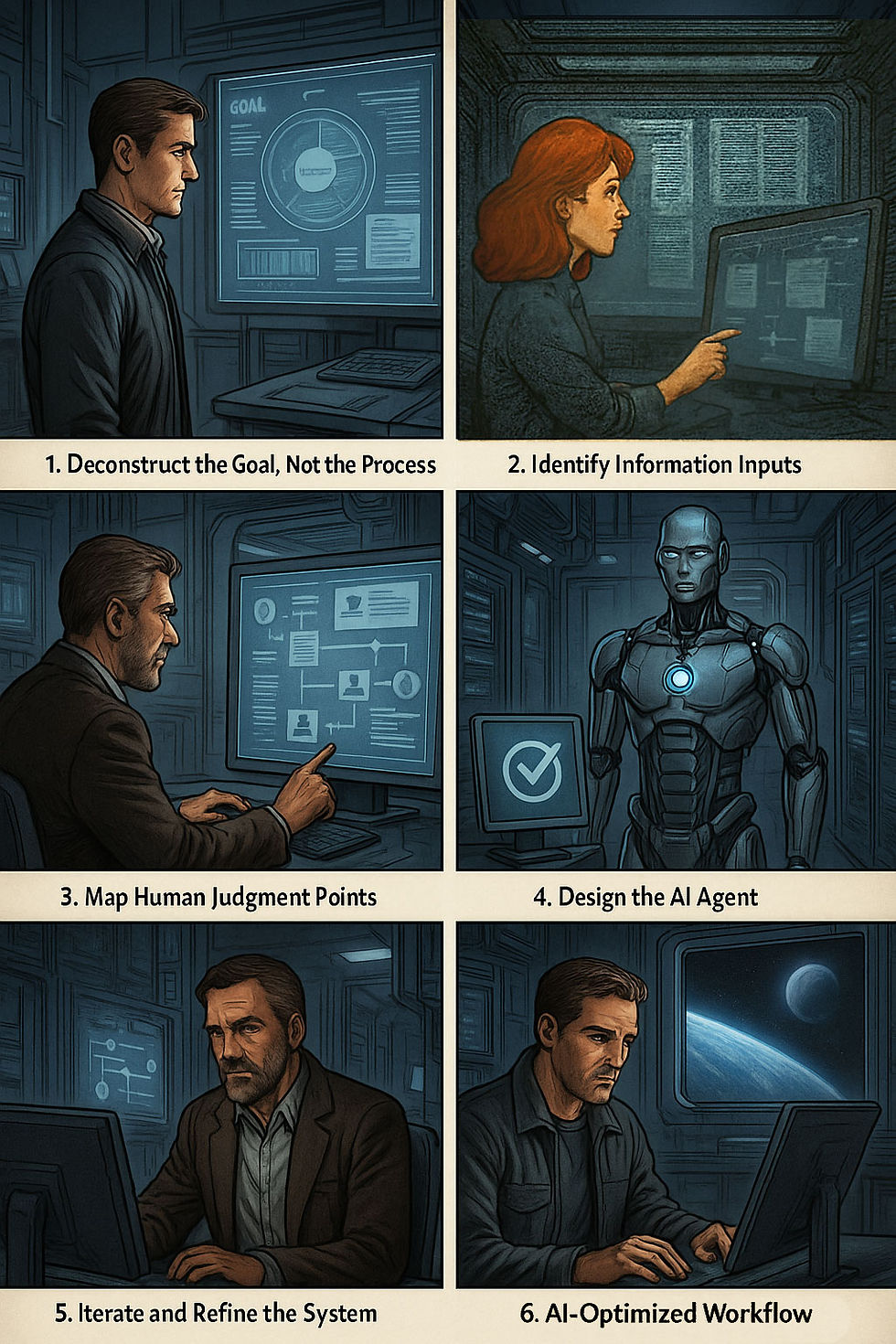

Moving from paved cowpaths to AI highways requires a structured, disciplined process. The following framework provides a prescriptive, step-by-step method for redesigning a business process from first principles.

Optimize the entire workflow for AI, rather than just the individual steps in the old process. (GPT Image-1)

Deconstruct the Goal, Not the Process: The starting point must be the ultimate business outcome, not the existing process. Instead of asking, “How can we make our RFP response process faster?” ask, “What is the most effective way to win this government contract?” This reframing is essential.

Identify Information Inputs: Catalog every piece of data and knowledge required to achieve the goal. For the goal of winning a contract, this would include the RFP documents, all past successful and unsuccessful proposals, detailed competitor intelligence, product specifications, pricing models, and all relevant legal and compliance regulations.

Map Human Judgment Points: Critically analyze the end-to-end workflow to identify the specific moments where human strategic decision-making, ethical oversight, creative input, or final approval are absolutely essential. These are the “human-in-the-loop” points where people must exercise agency and judgment. For example, the final decision on pricing strategy or the approval of the executive summary's tone would likely remain human judgment points.

Design the AI Agent: Architect an integrated AI agent that is responsible for executing all other tasks. This agent should be designed to take the identified information inputs and autonomously perform all non-judgment activities. This includes reading and parsing the RFP, synthesizing relevant information from past proposals, drafting initial responses for each section, and assembling a complete first draft of the proposal. This draft is then presented to the human expert for review at the designated judgment points. Platforms are now emerging that are designed specifically for this purpose, enabling companies to build and deploy custom AI agents for specific jobs without extensive coding.

Iterate and Refine the System: The process does not end with the first draft. The feedback, corrections, and strategic decisions made by the human expert at the judgment points are not just used for that single proposal. They are fed back into the system to refine and improve the AI agent's performance over time. This creates a continuous learning loop where the human-AI system becomes progressively more effective with each cycle.

Five steps for rethinking the entire workflow for AI. (Napkin)

This framework moves the focus from automating isolated tasks to orchestrating a complete, outcome-oriented system where humans and AI play distinct and complementary roles.
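To make the five steps more concrete, here is a minimal Python sketch of how they might map onto a human-in-the-loop pipeline for the RFP example. Everything in it is hypothetical: `OutcomeWorkflow`, the `draft` and `review` callables, and the section names are illustrative stand-ins, not any vendor's agent platform and not the author's own implementation. Where a real system would call an AI model, the stub simply formats a string.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

# Hypothetical signatures: an agent drafts a section from the inputs plus past
# feedback; a human reviews a draft and returns the approved (possibly edited) text.
DraftFn = Callable[[str, dict, list], str]
ReviewFn = Callable[[str, str], str]


@dataclass
class OutcomeWorkflow:
    goal: str                  # Step 1: the business outcome, not the legacy process
    inputs: dict[str, str]     # Step 2: the information inputs the goal requires
    judgment_points: set[str]  # Step 3: sections where a human must decide or approve
    feedback: list[tuple[str, str]] = field(default_factory=list)  # Step 5 memory

    def run(self, sections: list[str], draft: DraftFn, review: ReviewFn) -> dict[str, str]:
        """Step 4: the agent drafts every section; humans act only at judgment points."""
        result: dict[str, str] = {}
        for section in sections:
            text = draft(section, self.inputs, self.feedback)
            if section in self.judgment_points:
                approved = review(section, text)
                if approved != text:
                    # Step 5: keep the human's correction so later drafts can learn from it.
                    self.feedback.append((section, approved))
                text = approved
            result[section] = text
        return result


# Usage with stubs standing in for a real AI model and a real human reviewer.
def stub_agent(section: str, inputs: dict, feedback: list) -> str:
    return f"draft {section} ({len(feedback)} lessons)"


def stub_human(section: str, text: str) -> str:
    # Pretend the human rewrites the pricing section but accepts everything else.
    return text.upper() if section == "pricing" else text


wf = OutcomeWorkflow(
    goal="Win the government contract",
    inputs={"rfp": "RFP text", "past_proposals": "proposal archive"},
    judgment_points={"pricing", "executive_summary"},
)
proposal = wf.run(["technical_approach", "pricing", "executive_summary"],
                  stub_agent, stub_human)
print(proposal)
print(len(wf.feedback))  # 1
```

The design choice worth noting is that a human sees only the sections listed as judgment points, and only the human's overrides are recorded as feedback, so the refinement loop in step 5 learns from exactly the decisions people chose to change.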

Stop optimizing. Start reimagining. The future belongs to those who recognize that AI isn’t just a better hammer: it’s an entirely different tool chest.

One-Click AI Creation

ElevenLabs just launched a service called “AI Jingle Maker” that makes a jingle about a website based on nothing but its URL. One-shot creation! I used it to generate jingles about my own website and a few other famous UX websites. (YouTube, 4 min.)

I didn’t like these songs nearly as much as the ones I made myself with Suno. But it’s interesting that ElevenLabs has a dedicated website for making songs with no prompting beyond a URL. Minimizing user work will be big for AI, as we also saw when ChatGPT allowed people to convert any existing photo into a Studio Ghibli-style anime image with no effort.

Very old usability finding: as we lower the barrier to using a system, more people will jump that barrier and become users!

AI can one-shot the creation of a song with no other user action than giving it the URL of the website on which the song should be based. The less work we require of users, the more people will use a feature. (GPT Image-1)

AI Auto-Creates a Narrated Slideshow

One of the key abilities of current AI products is to transform content between formats. For example, I recently transformed one of my usability heuristics into an illustrated storybook that relied on storytelling instead of intellectual analysis to get my point across. As another example, I transformed another usability heuristic into a music video set in a 1940s nightclub.

Two concepts for my recent manually edited videos about usability heuristics: (1) explained by Vikings, and (2) 1940s nightclub. (GPT Image-1)

Google’s NotebookLM has long had the ability to create podcasts where two synthesized “hosts” discuss a topic based on a set of written documents you provide. See, for example, the podcast Design Leaders Should Go “Founder Mode” I made based on my article about Founder Mode. These podcasts are called “audio overviews” and have become very popular, often as a way for users to get a quick overview of a large body of text that they can listen to while exercising or commuting. Great use of AI’s multimodal capabilities.

One reason podcasts have become so popular is that you can listen while exercising. Still, adding video can make content more compelling and informative. (GPT Image-1)

NotebookLM recently expanded from audio overviews into video overviews: you can now upload one or more documents, and NotebookLM will automatically create a video about that material. Currently, these videos are very simplistic narrated slideshows, though Google has hinted at plans to expand into more highly produced videos. Since Google currently has the best video model (Veo 3) for creating videos with sound from a text prompt, it doesn’t take much imagination to picture Google using it, at a minimum, to make great B-roll for these narrated videos, and possibly to create something even better.

(For an example of the kinds of videos you can make with Veo 3, watch my video on The Error Prevention Usability Heuristic Explained by Vikings.)

I made one of these video overviews about the top 10 UI annoyances, based on my article on the same topic. For comparison, you can watch an avatar video I made myself about the 10 annoyances, where I manually edited the manuscript and created appropriate B-roll clips to illustrate the points and keep the viewer’s interest throughout the video. (A 6-minute video is long on YouTube, where people have very limited patience and often stop watching in less than 30 seconds unless a video pulls them in.)

I recommend you watch both videos (AI-created vs. human-created) and consider the differences. Please let me know in the comments which you prefer.

Of course, when judging these two videos about the same topic, remember that the AI-created video was created with about one second of effort: click the button to make a video overview. The human-created video cost me several hours of time to think of ideas for B-roll (even though I obviously used AI to help in this ideation phase), make multiple variations of these B-roll clips, review the clips to select the best, and finally edit the lot together in CapCut.

Manual editing can still create more compelling videos than the one-click results from AI, but then that one-click operation is mighty compelling and time-saving. (GPT Image-1)

I feel that the NotebookLM slideshow is too boring: in particular, the slides are poorly designed, both in visual appeal and in the punchiness of their content. To slightly alleviate the viewers’ boredom, I added an avatar in the corner of the slides to bring some liveliness to the video experience.

In contrast, the voiceover generated by NotebookLM is great, except for a few glitches when moving between sections. One can tell that the NotebookLM team has extensive experience creating engaging narrative voices. Whereas they use two bantering hosts for the audio overviews, the video overviews are voiced by a single narrator, but in a very lively manner. I think this synthesized voice beats the voice from ElevenLabs I used for my own avatar video. NotebookLM’s voice is very engaging and appropriately varied for the different segments it’s narrating.

Google currently delivers the most compelling voice in T2S (text-to-speech). ElevenLabs is probably the runner-up. (GPT Image-1)

The most engaging AI voice on the market currently comes from the bantering pair of podcast hosts delivered by Google NotebookLM. (GPT Image-1)

Changing the Wording of Questions in Standard Surveys

Can you change the wording of a question in a standardized survey if you feel that the standard phrasing isn’t optimal for your project? I would have thought that the answer was “no,” because we’ve often seen that minor copy changes can cause major shifts in user actions.

In a new article, MeasuringU presents recent data showing that minor edits can be acceptable and do not change answers to any measurable degree, meaning that the responses to the revised survey can be compared with responses to previous surveys with the former wording.

For example, the following question variants worked the same (all scored on rating scales from “strongly disagree” to “strongly agree”):

{Product}’s functionality meets my needs.

{Product}’s features meet my needs.

{Product}’s functions meet my needs.

{Product} does what I need it to do.

Of course, you can’t just edit wildly and expect respondents to react the same. For example, products received lower scores when the question was shortened to:

{Product} meets my needs.

Apparently, the inclusion of words like “functionality” or “features” made people a little more generous. Without them, respondents seemed to judge the product relative to an idealized perfect product, as opposed to considering the specific features on offer.

It seems like an innocent simplification to change a question from asking about a product’s features to just asking about the product, but cutting this word changed people’s responses. (GPT Image-1)

The article concludes that one can edit standard questions as long as one takes care not to modify the scope or intensity of the question. However, I recommend refraining from such changes unless you have strong reasons why the standard wording is inappropriate for your study. Three reasons:

Most important, the standard wording has been refined over many iterations by folks like MeasuringU who specialize in optimizing research instruments. You’re unlikely to improve on their work unless you devote months to the effort.

Even though I accept the finding that survey wordings can be changed with care without adverse impact on the results, there’s always the risk that you aren’t careful enough. And you probably don’t have time or money to run an extra study to check whether the old and the new wording produce the same results.

You have better things to do than mess with survey wording when you can simply copy the standard wording. The ROI is almost certainly higher from creating and testing one more iteration of your design.

Certain changes in the wording of standardized survey questions are acceptable and don’t change responses. But other small changes do undermine the findings. Better not change anything, unless you really have to. (GPT Image-1)
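If you do decide to change a standard wording, checking whether the old and new wordings produce the same results is an equivalence question, not a difference question: failing to find a significant difference does not prove that two wordings are equivalent. One common statistical approach is two one-sided tests (TOST). The Python sketch below is an illustration under stated assumptions, not MeasuringU's method: it uses a large-sample normal approximation (a t-distribution would be more appropriate for small samples), the ±0.5-point equivalence margin is an arbitrary choice for this example, and the ratings are made-up placeholders on a 7-point agreement scale.

```python
from __future__ import annotations

from math import sqrt
from statistics import NormalDist, mean, stdev


def wordings_equivalent(old: list[float], new: list[float],
                        margin: float, alpha: float = 0.05) -> bool:
    """Two one-sided tests (TOST) with a large-sample normal approximation.

    Returns True if the data support |mean(new) - mean(old)| < margin at level
    alpha, i.e., the two wordings can be treated as equivalent for that margin.
    """
    diff = mean(new) - mean(old)
    # Standard error of the difference between two independent means (Welch-style).
    se = sqrt(stdev(old) ** 2 / len(old) + stdev(new) ** 2 / len(new))
    std_normal = NormalDist()
    # Test 1, H0: diff <= -margin. A small p means diff is above the lower bound.
    p_lower = 1 - std_normal.cdf((diff + margin) / se)
    # Test 2, H0: diff >= +margin. A small p means diff is below the upper bound.
    p_upper = std_normal.cdf((diff - margin) / se)
    # Equivalence is claimed only if both one-sided tests reject.
    return max(p_lower, p_upper) < alpha


# Hypothetical ratings (1-7 agreement scale), 100 respondents per wording.
old_wording = [4, 5, 6, 7] * 25
same_scores = [4, 5, 6, 7] * 25   # new wording, same distribution
lower_scores = [3, 4, 5, 6] * 25  # new wording, one point lower on average

print(wordings_equivalent(old_wording, same_scores, margin=0.5))   # True
print(wordings_equivalent(old_wording, lower_scores, margin=0.5))  # False
```

The margin is the crucial judgment call: it states how large a shift in average score you are willing to ignore, and it should be chosen before collecting data. This extra study is exactly the time and money cost that the second reason above warns about.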

The Market Will Support Multiple AI Models

Insightful article by Anish Acharya and Justine Moore (my favorite VC) predicting market support for a wide range of specialized AI. Their main example is platforms for generating applications, more or less along the lines of Vibe Coding and Vibe Design. In this domain, we’re currently seeing successful products with different specializations, such as Lovable (mostly targeting design and prototyping) and Bolt (mostly targeting developers).

They also expect additional platforms to arise to target the “development” (or, more likely, the “vibing into existence”) of personal software that’s just intended for the user’s own use. (Excel and other spreadsheets could be said to be an early version of this, mostly aimed at personalized number crunching, though this type of personal-app platform is so useful that people have been torturing Excel into supporting applications for which it’s not that suited.)

This is in contrast to the “winner takes all” theory that’s popular among many Silicon Valley analysts and was somewhat true in the past. For example, with smartphone platforms, even Microsoft was not strong enough to break the Apple-Android duopoly, though Huawei finally seems to be achieving this feat.

The future of AI will likely be one of many specialized products with different UX designs targeted at different users and tasks, as opposed to a uniform future dominated by a few AI models. (GPT Image-1)

I believe it is likely that Acharya and Moore are right (and not just because I like most of Moore’s other insights). AI will soon account for half of the world economy, meaning that it will be used in so many ways that there is big alpha to be had from specialization, rather than trying to fit a single foundation model to all purposes.

Although they don’t develop this idea in much depth, I believe the UX angle will be particularly important for driving specialization. As they do point out, amateurs and hardcore professionals have different needs. Take something like music creation. An amateur like myself is happy with Suno, though I would probably create better songs with a UI with more scaffolding for users with little knowledge of music theory and no skills in manual music creation. On the other hand, a professional musician would benefit from more advanced features and detailed control.

10 Most Annoying UI Design Mistakes

New video about the 10 most annoying UI design mistakes that are still much too common on websites. (YouTube, 10 minutes.)

New video about annoying designs. (GPT Image-1)

Riddle Solution

In last week’s newsletter, I posted a riddle created with the GPT Image-1 native model. Here’s the solution:

Each building stands for the initial of the city in which it is located:

Udaipur: The City Palace

Sydney: Opera House

Athens: The Acropolis

Berlin: Brandenburger Tor

Istanbul: The Hagia Sophia

London: Big Ben

Istanbul: The Blue Mosque (Sultan Ahmed Mosque)

Tokyo: The Tokyo Tower

Yangon: The Shwedagon Pagoda

Using a usability riddle to comment on itself: this riddle is much harder because it’s not enough to recognize the buildings or to remember what they are called. The solution is indirect, using the names of the cities rather than the objects that are shown.
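The difference between the indirect riddle and the direct hint can be expressed in a few lines of Python. This is only an illustration of the decoding rule described above; the lookup table simply restates the solution list as data. The indirect version needs an extra piece of knowledge (which city each building is in) before it can take a first letter, whereas the direct version reads the first letter straight off each object's name.

```python
from __future__ import annotations

# Indirect encoding (the riddle): building -> city -> first letter of the city.
# A human solver must know each city; here that knowledge is a lookup table.
BUILDING_TO_CITY = [
    ("The City Palace", "Udaipur"),
    ("Opera House", "Sydney"),
    ("The Acropolis", "Athens"),
    ("Brandenburger Tor", "Berlin"),
    ("The Hagia Sophia", "Istanbul"),
    ("Big Ben", "London"),
    ("The Blue Mosque", "Istanbul"),
    ("The Tokyo Tower", "Tokyo"),
    ("The Shwedagon Pagoda", "Yangon"),
]

# Direct encoding (the hint): object -> first letter of the object's own name.
HINT_OBJECTS = ["Jeans", "Apple", "Keys", "Orange", "Binoculars"]


def decode_indirect(pairs: list[tuple[str, str]]) -> str:
    """Take the initial of the city, not of the building shown."""
    return "".join(city[0] for _building, city in pairs)


def decode_direct(objects: list[str]) -> str:
    """Take the initial of each pictured object."""
    return "".join(name[0] for name in objects)


print(decode_indirect(BUILDING_TO_CITY))  # USABILITY
print(decode_direct(HINT_OBJECTS))        # JAKOB
```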

And here’s the hint I provided at the end of last week’s newsletter. Note how much easier it is to solve a direct representation. That’s a good hint for icon designers!

Jeans, Apple, Keys, Orange, Binoculars = Jakob. (GPT Image-1)